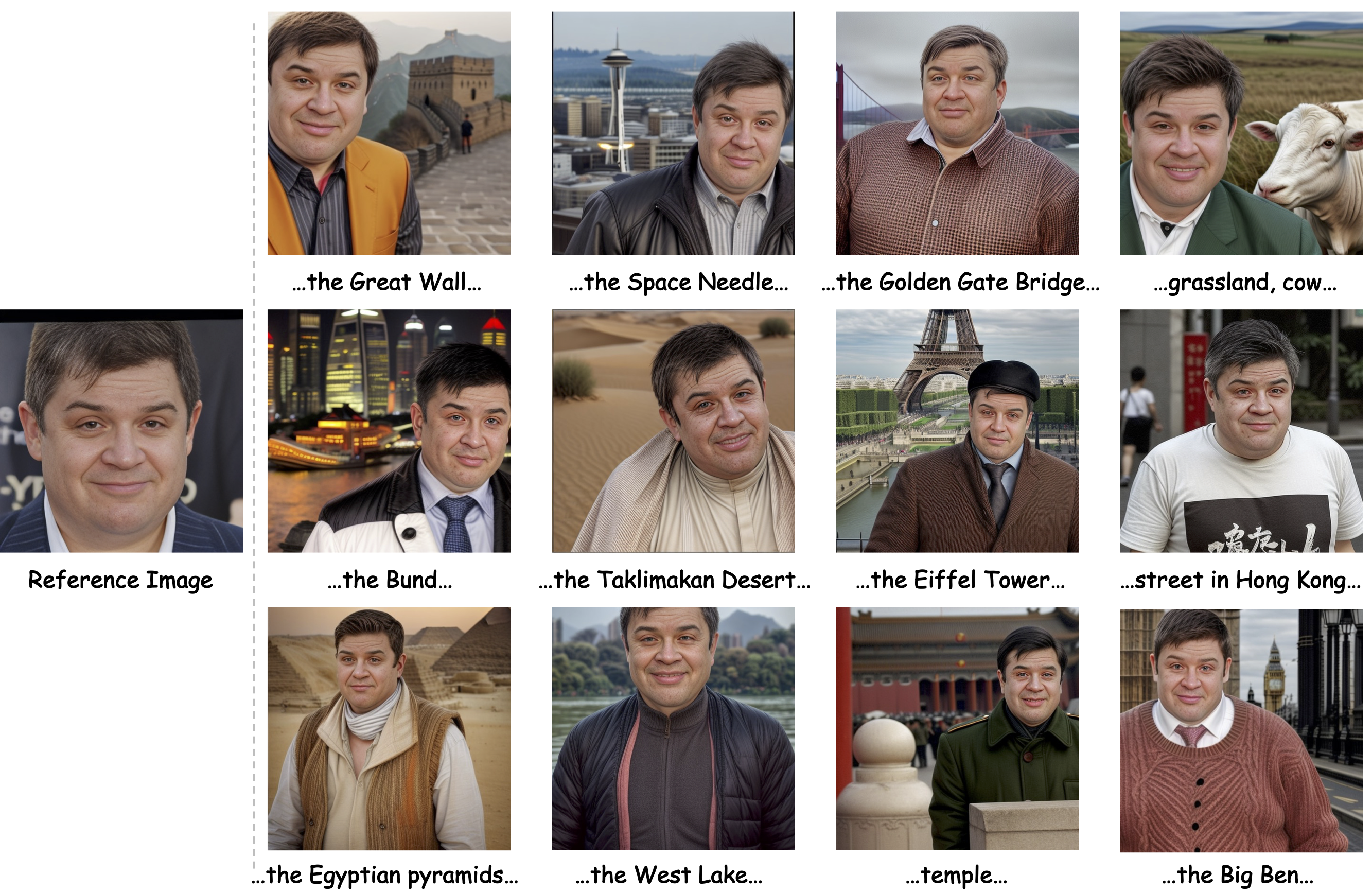

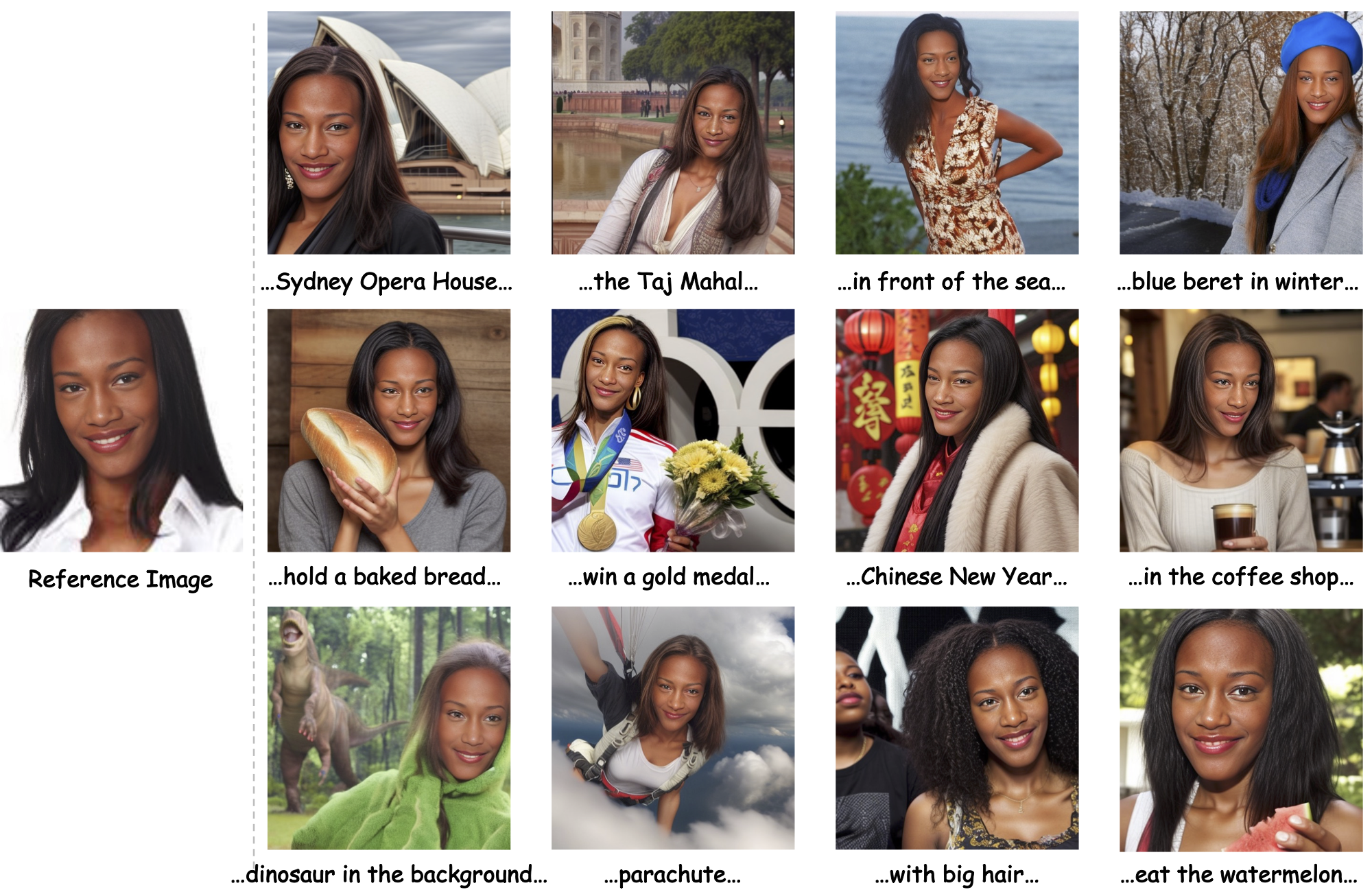

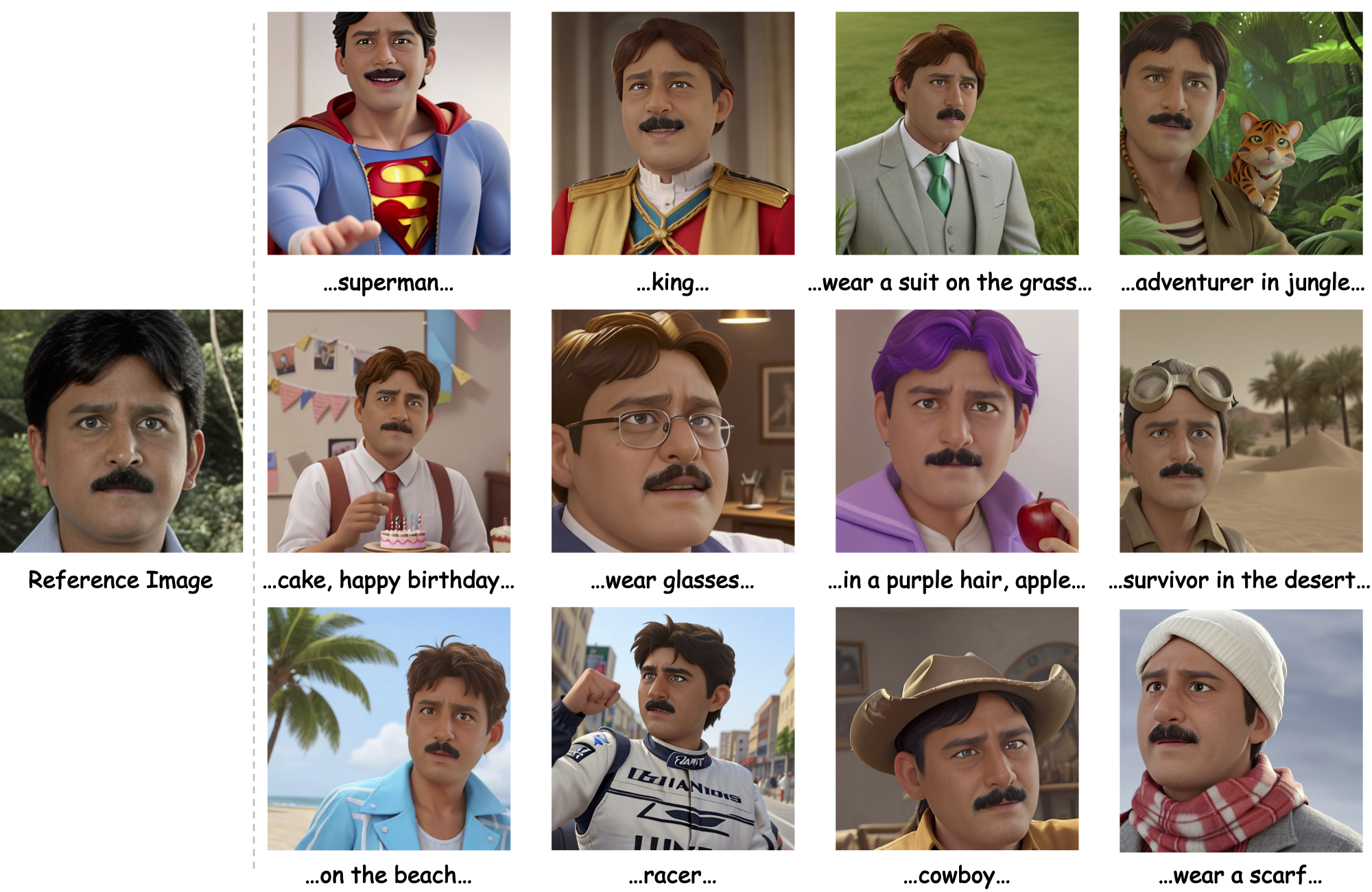

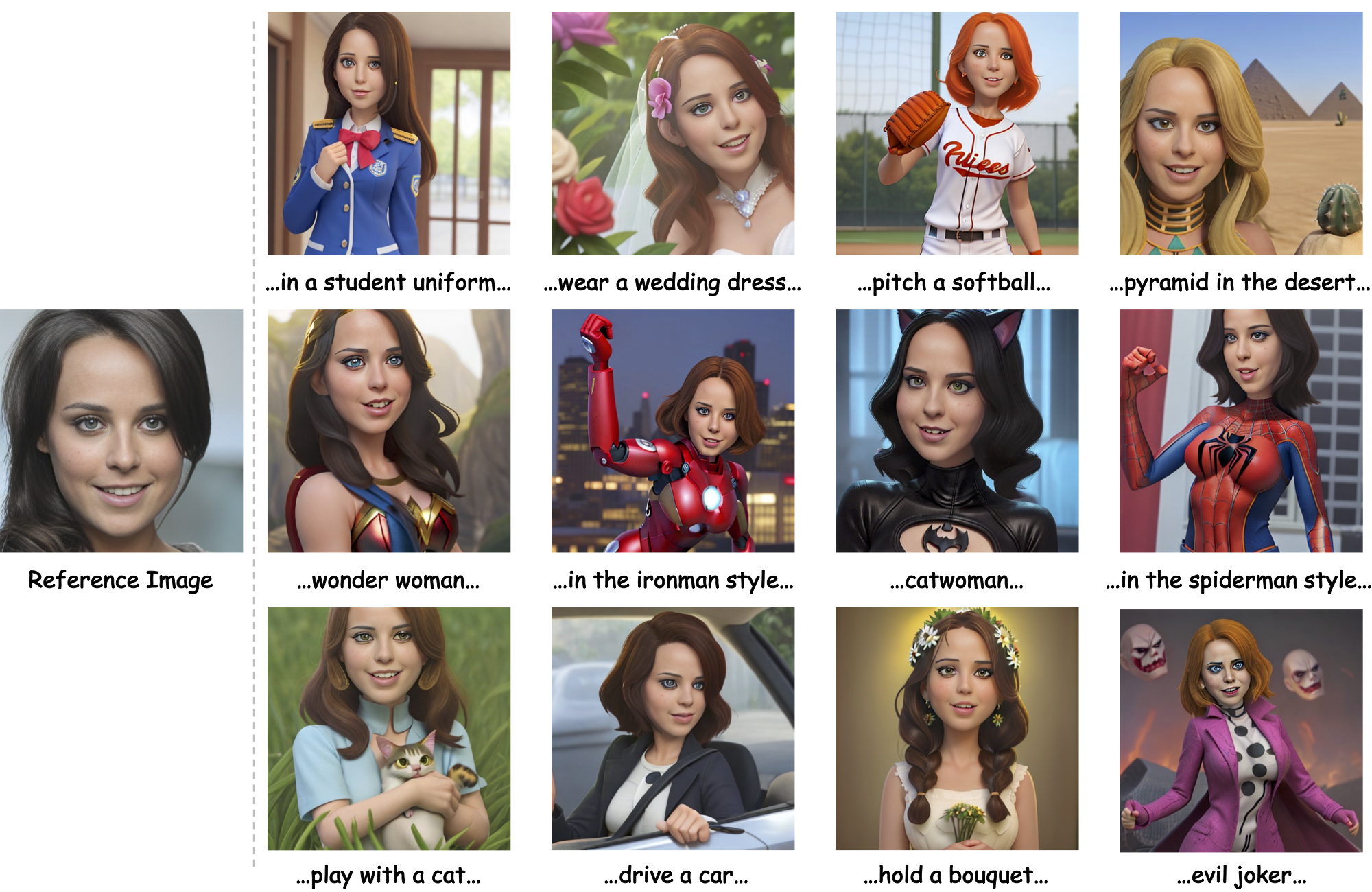

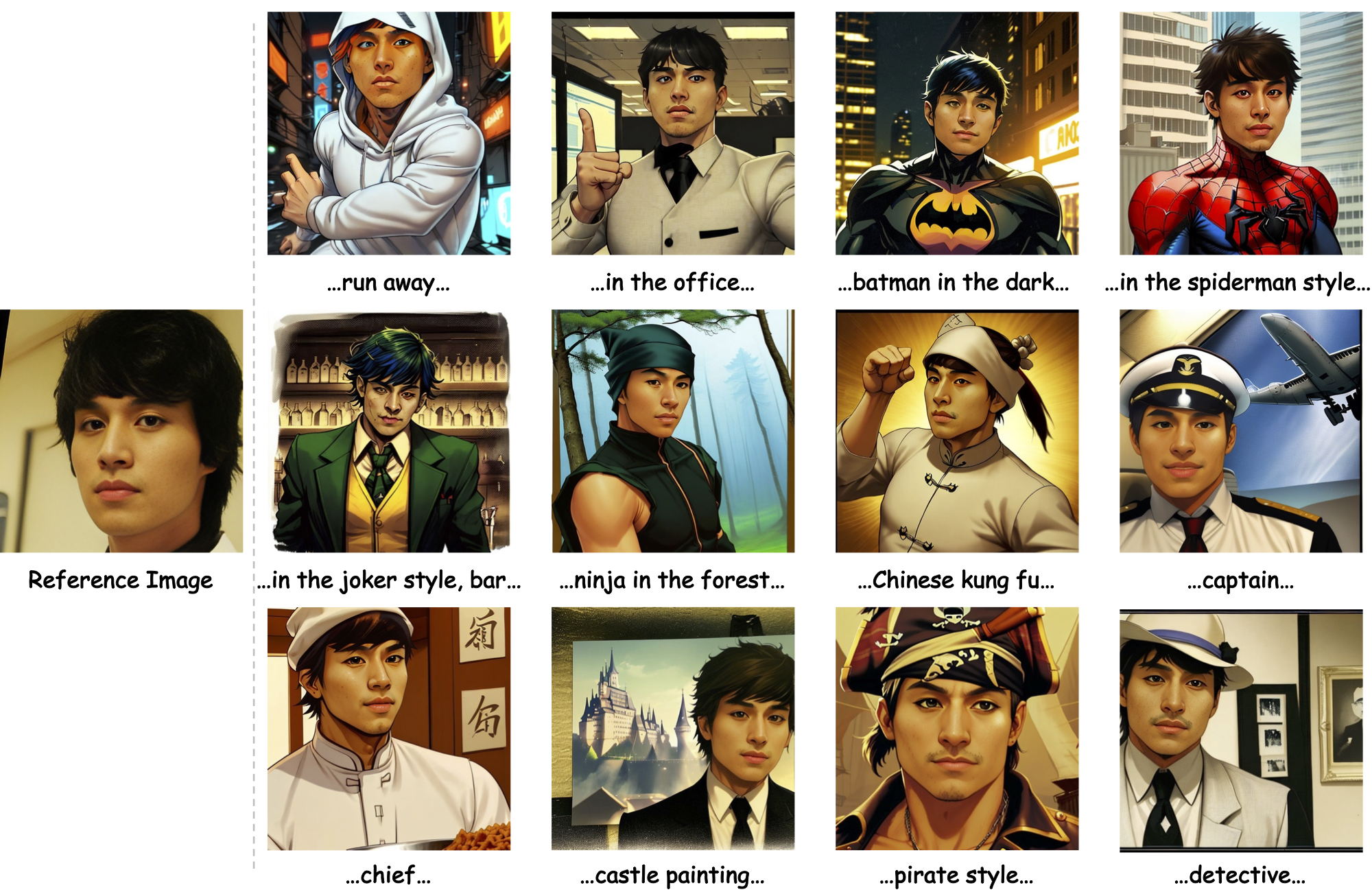

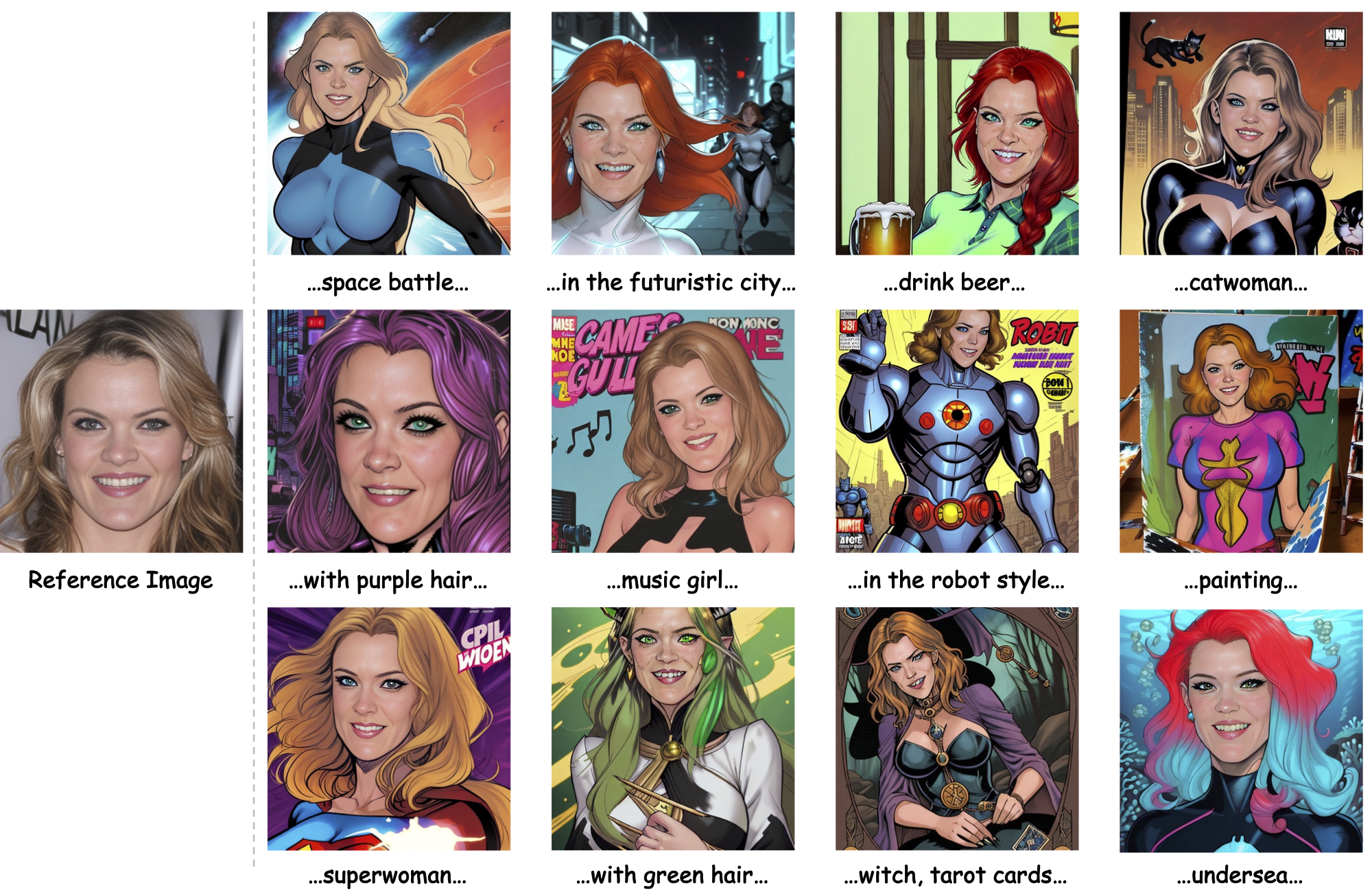

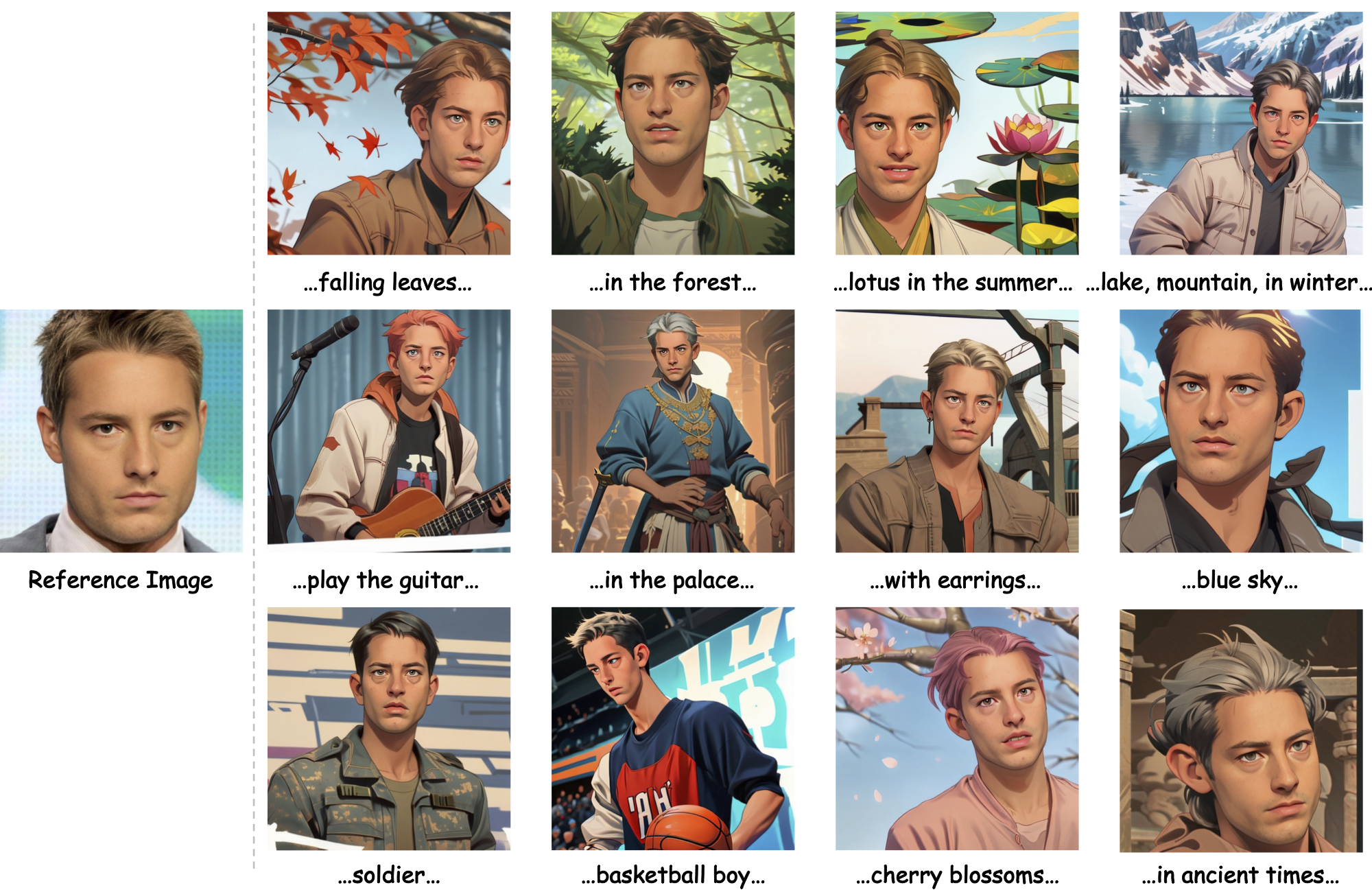

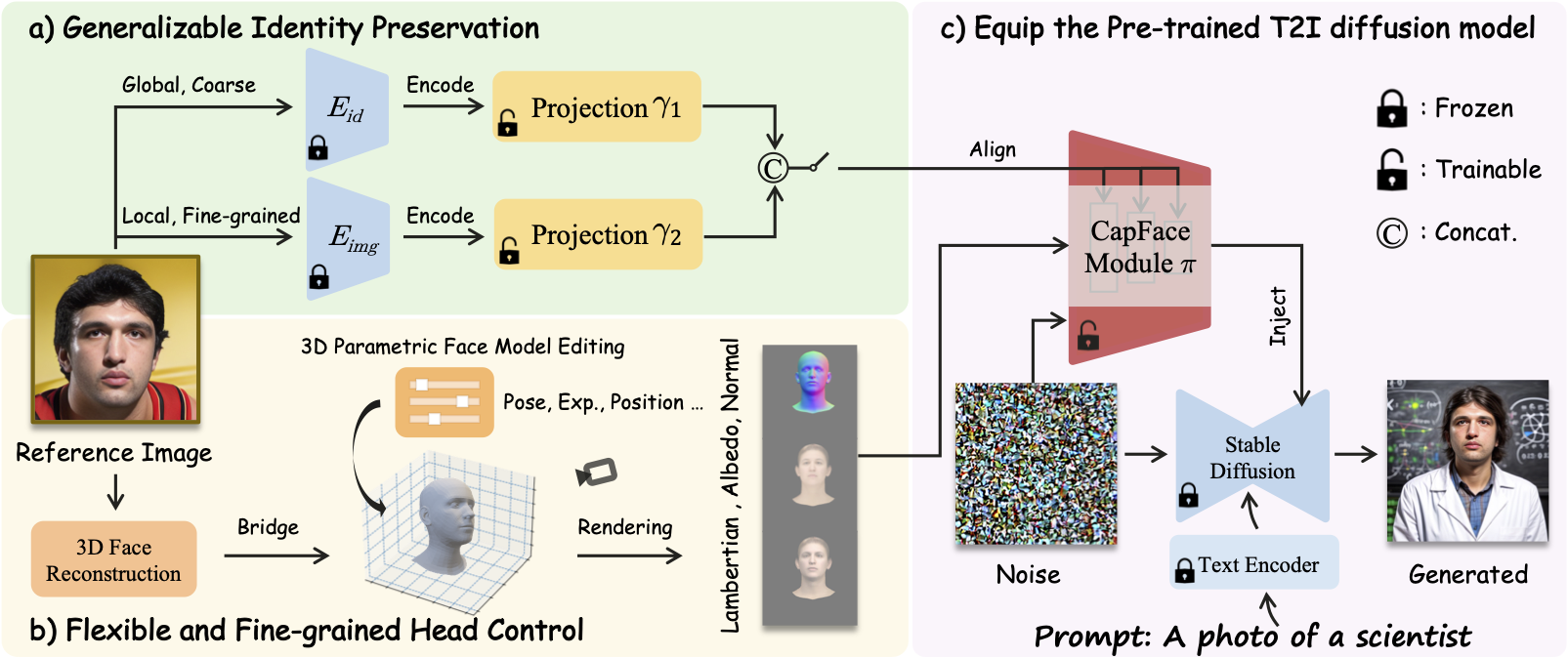

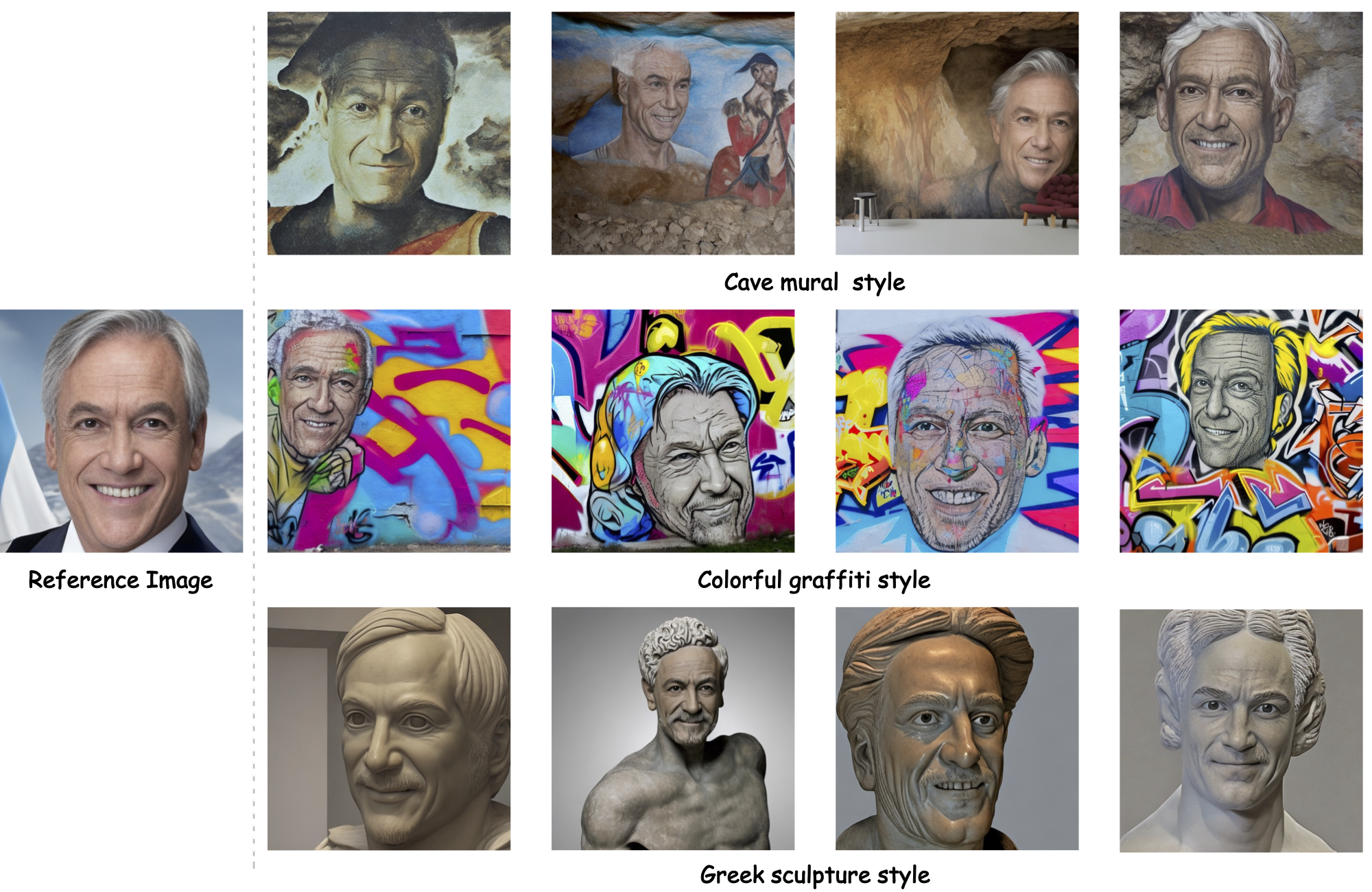

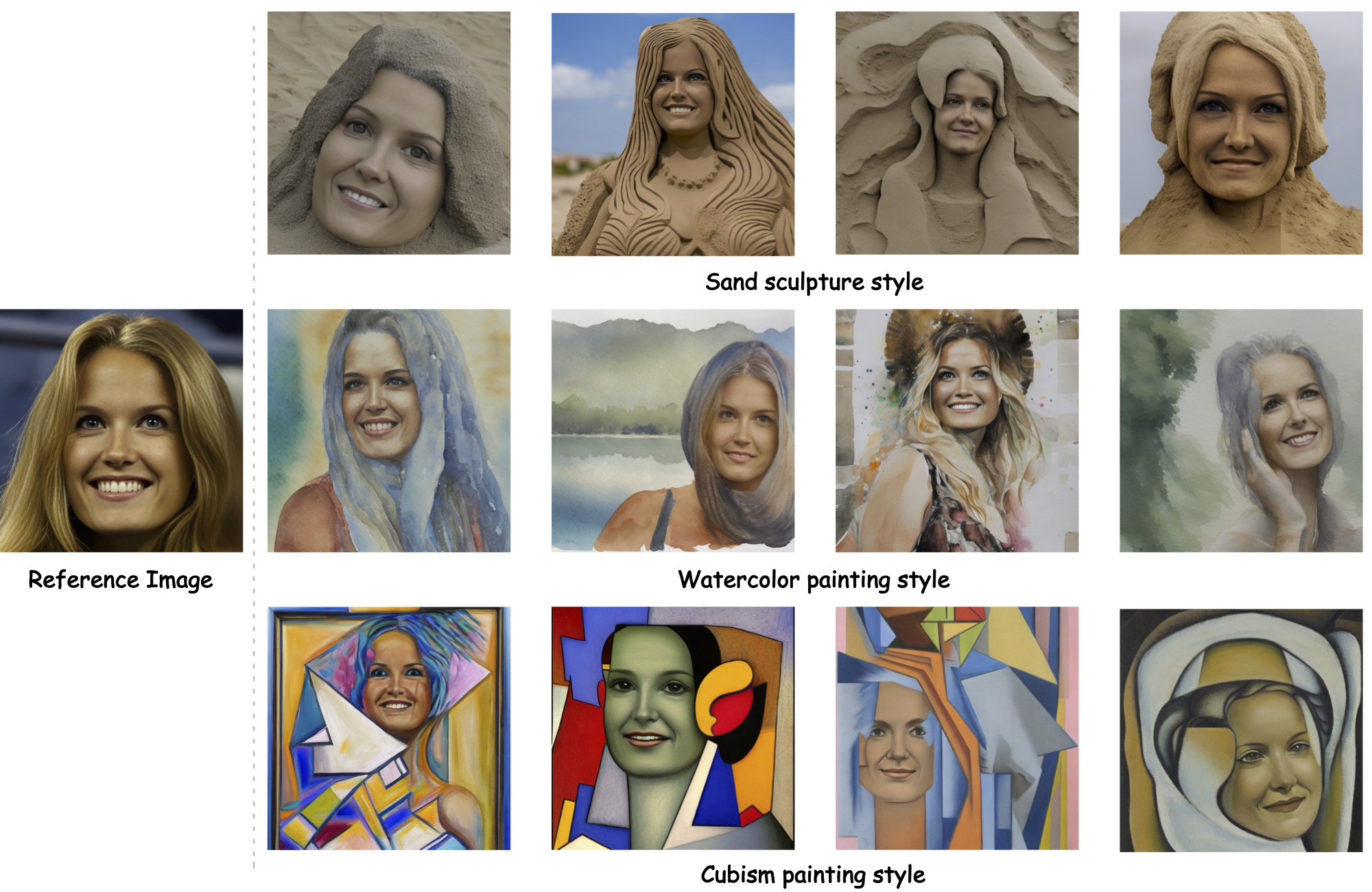

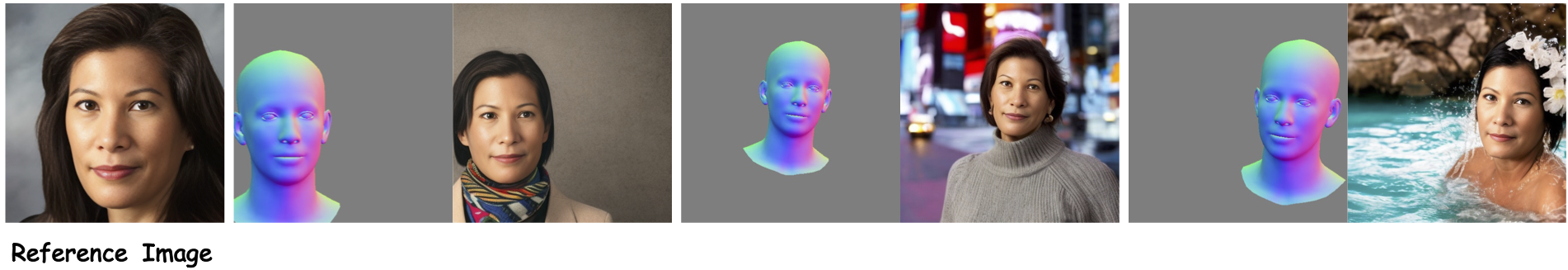

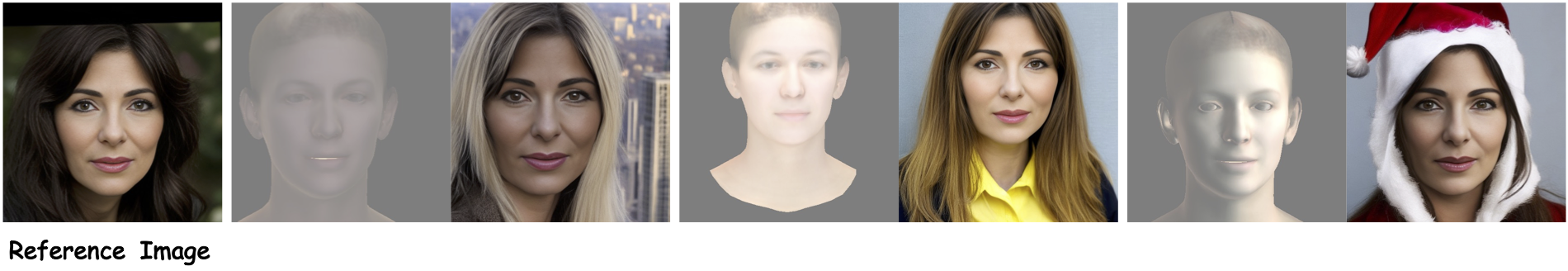

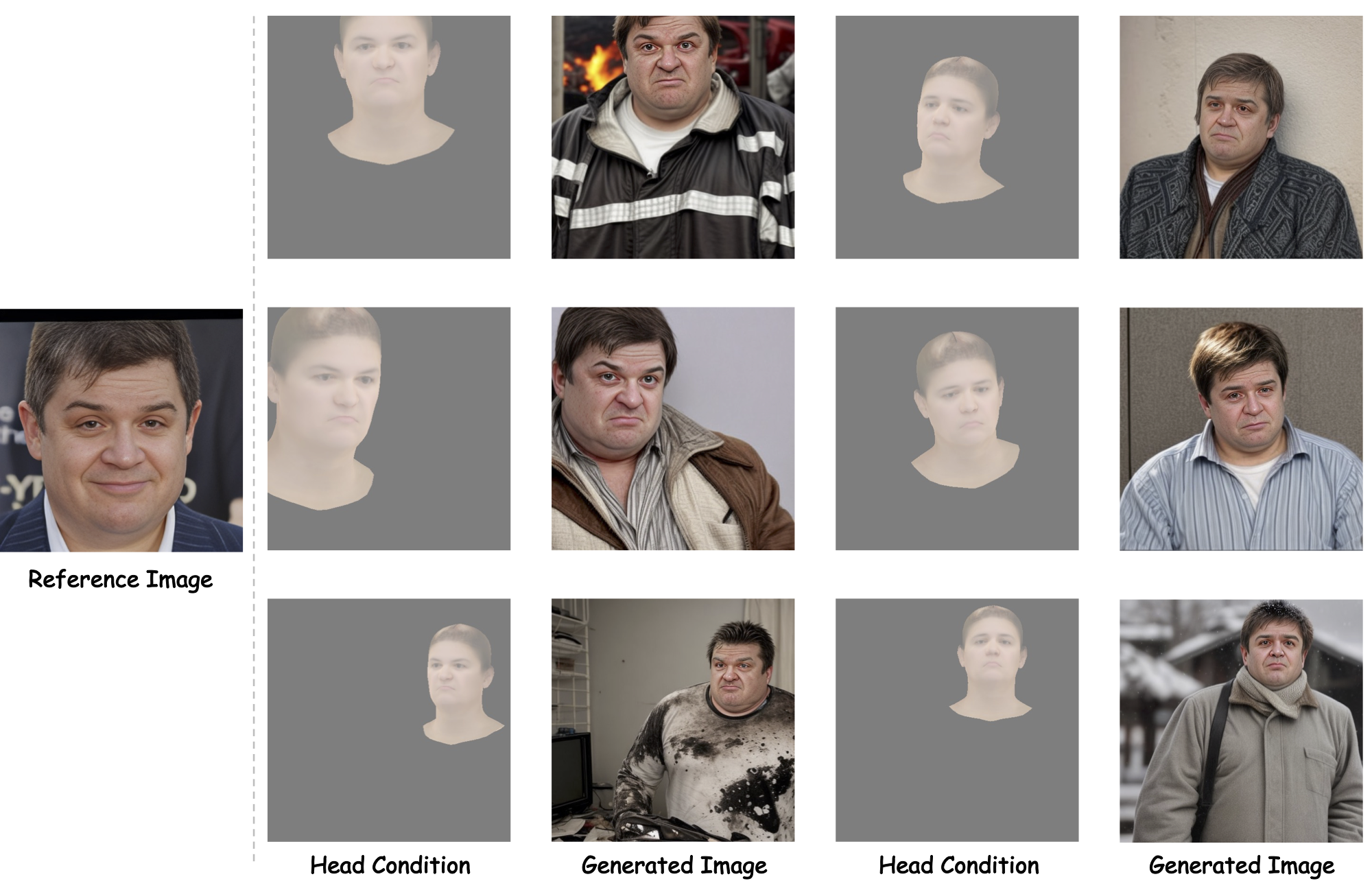

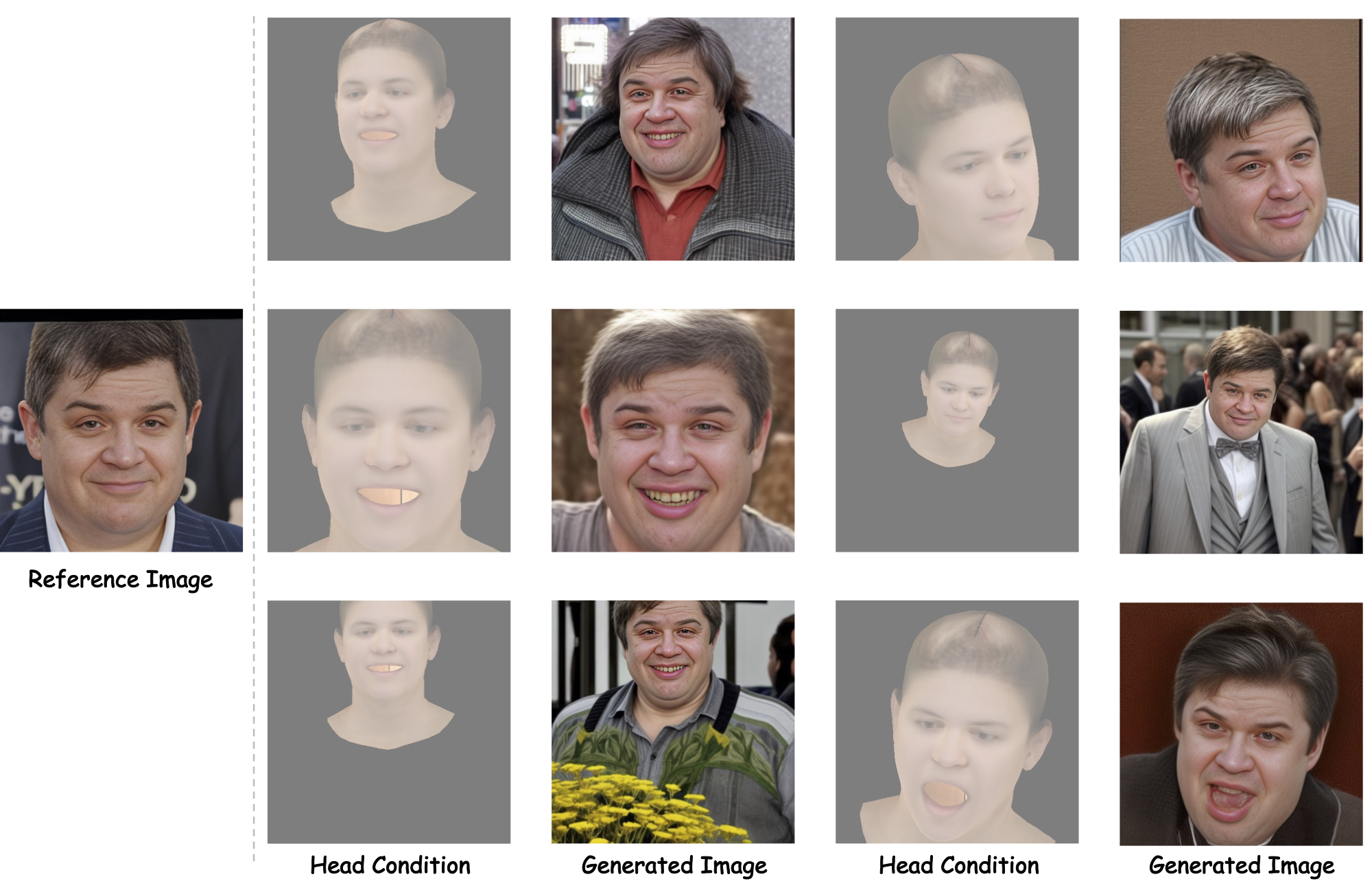

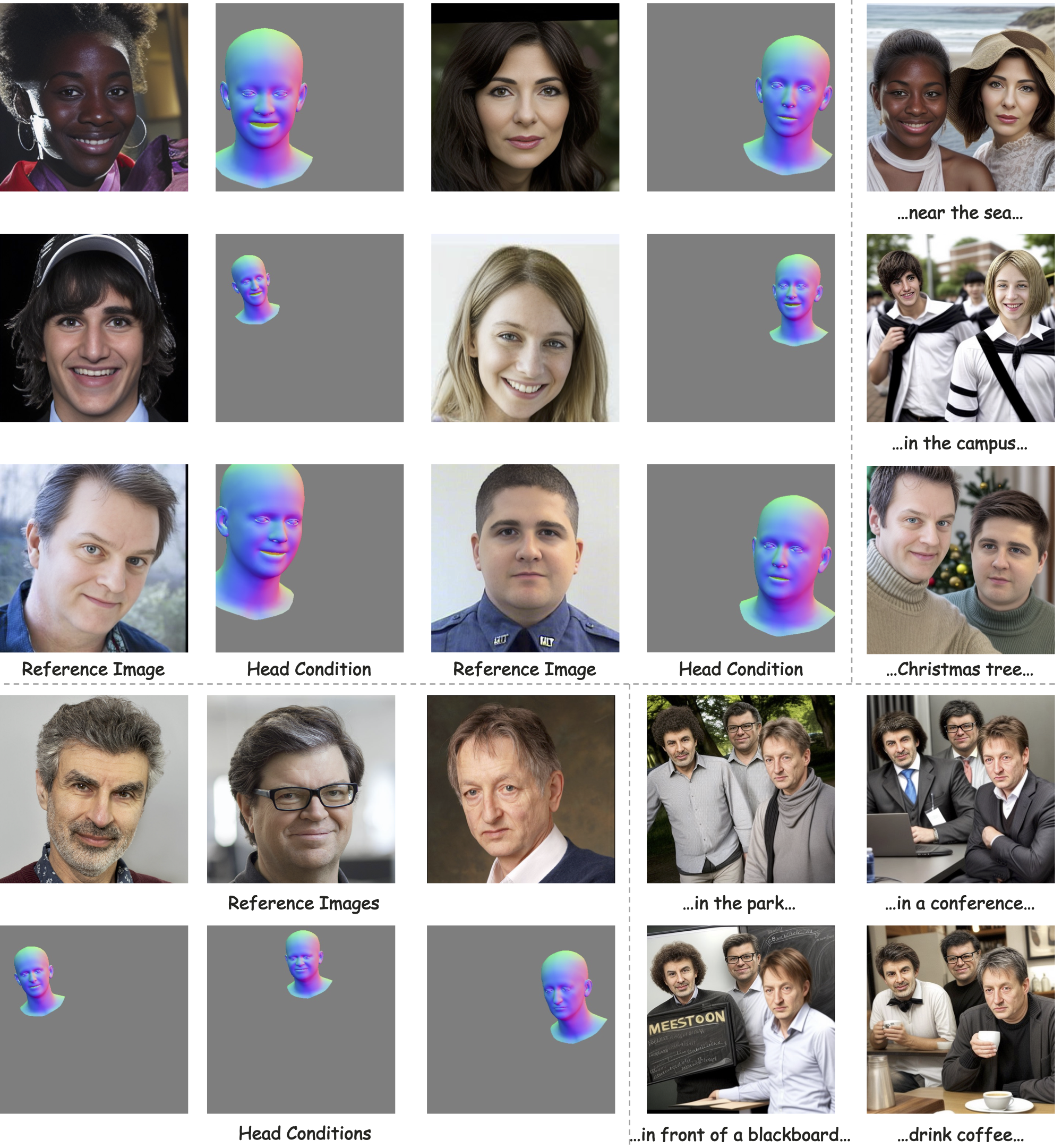

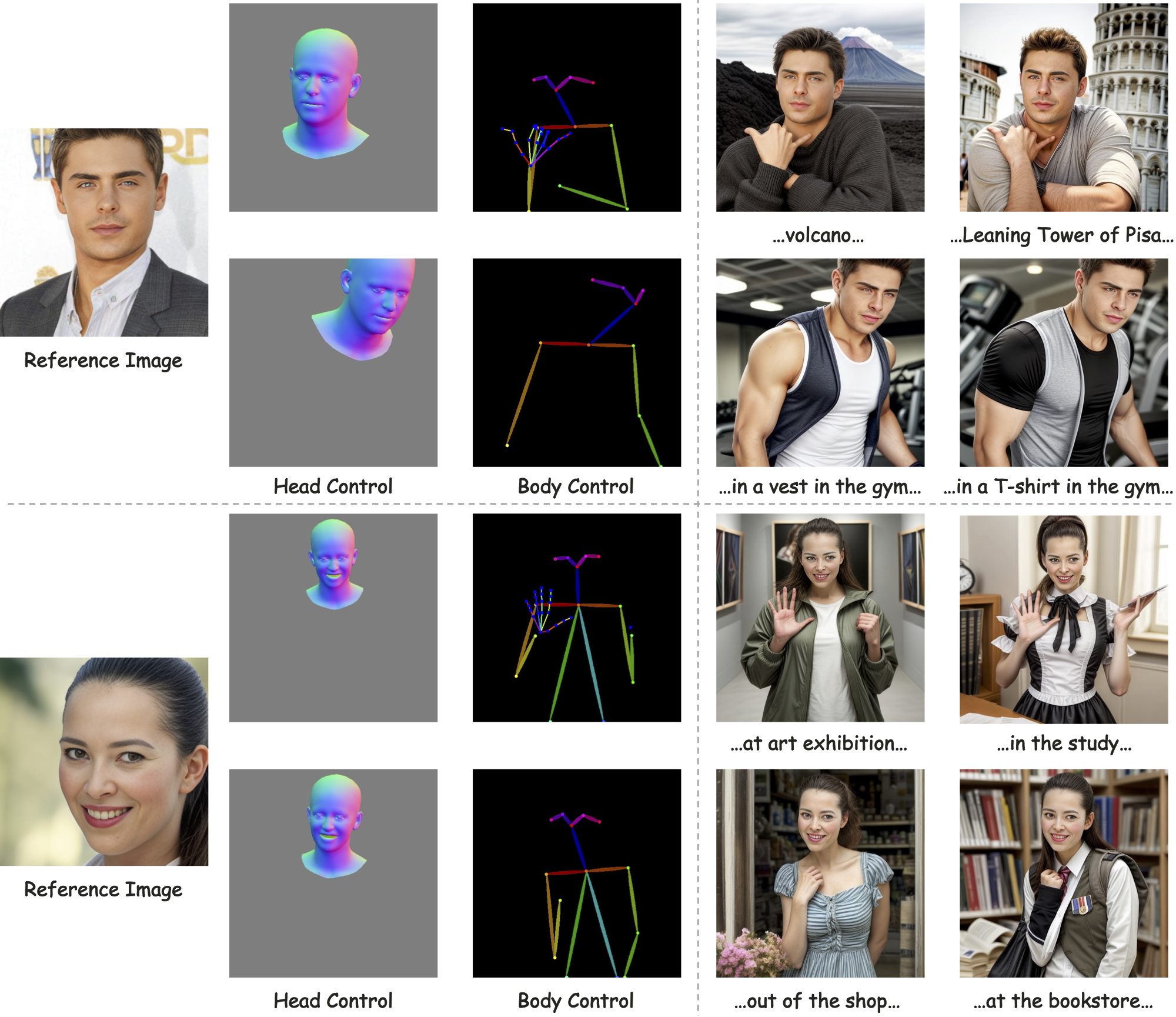

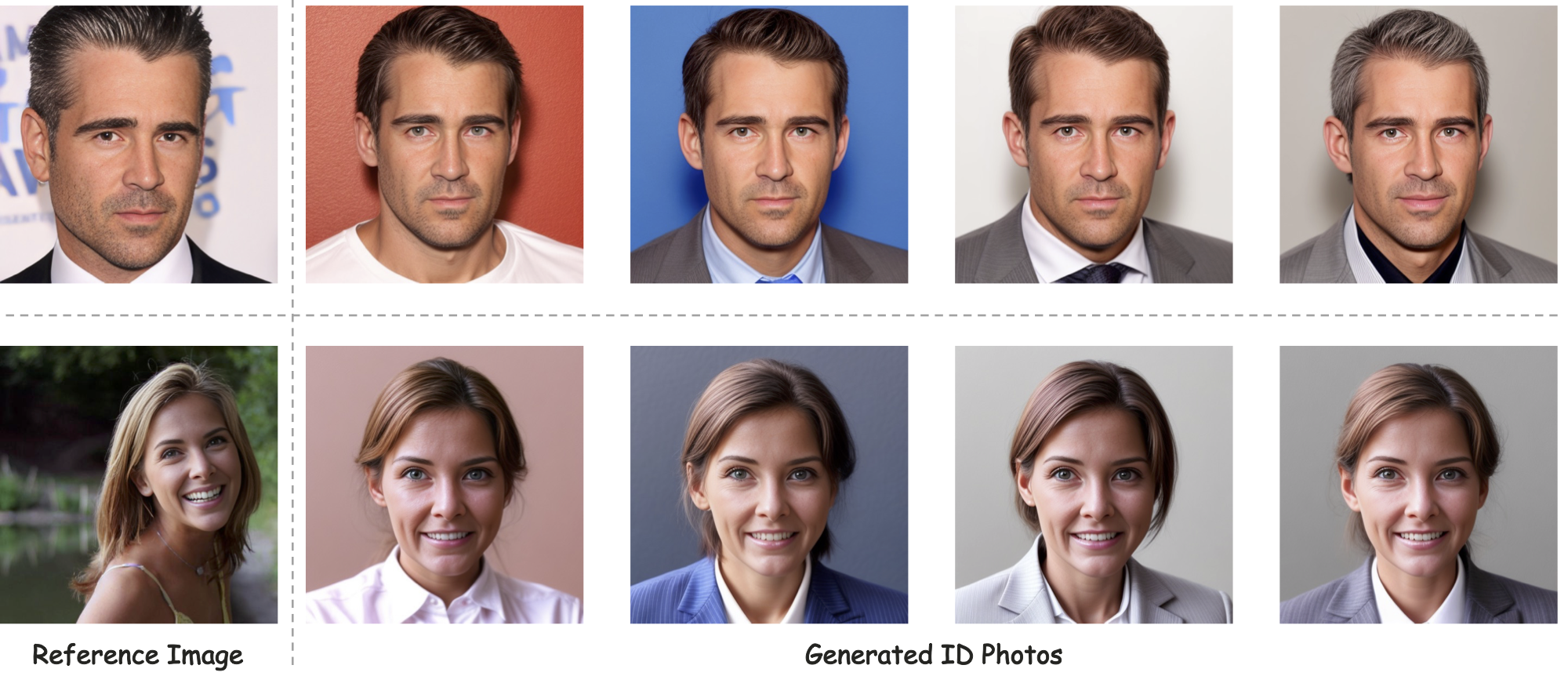

We concentrate on a novel human-centric image synthesis task: given only one reference facial photograph, generate images of that specific individual with diverse head positions, poses, and facial expressions in different contexts. To accomplish this goal, we argue that our generative model should have the following characteristics: (1) a strong visual and semantic understanding of our world and human society for basic object and human image generation; (2) generalizable identity preservation ability; (3) flexible and fine-grained head control. Recently, large pre-trained text-to-image diffusion models have shown remarkable results, serving as a powerful generative foundation. Building on this foundation, we aim to unleash the latter two capabilities, (2) and (3), in the pre-trained model. In this work, we present a new framework named CapHuman. We embrace the "encode then learn to align" paradigm, which enables generalizable identity preservation for new individuals without cumbersome tuning at inference: CapHuman encodes identity features and then learns to align them into the latent space. Moreover, we introduce a 3D facial prior to give our model flexible and 3D-consistent control over the human head. Extensive qualitative and quantitative analyses demonstrate that CapHuman can produce identity-preserving, photo-realistic, and high-fidelity portraits with content-rich representations and various head renditions, superior to established baselines.

CapHuman builds on a pre-trained text-to-image (T2I) diffusion model. a) We embrace the "encode then learn to align" paradigm for generalizable identity preservation. b) The introduction of the 3D parametric face model enables flexible and fine-grained head control. c) We learn a CapFace module to equip the pre-trained T2I diffusion model with the above capabilities.
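To make the "encode then learn to align" idea more concrete, the following is a minimal, dependency-free sketch of one way encoded identity features could be aligned into a latent space: a linear projection followed by cross-attention with a residual connection. This is an illustrative assumption, not the CapFace module itself. The names `align_identity`, `w_proj`, and `scale`, the toy dimensions, and the choice of cross-attention are all hypothetical, and the projection weights are random here where a real system would learn them.

```python
import math
import random


def linear(x, weight):
    """Project vector x (length d_in) with a d_in x d_out weight matrix."""
    d_out = len(weight[0])
    return [sum(x[i] * weight[i][j] for i in range(len(x))) for j in range(d_out)]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def align_identity(latent_tokens, id_tokens, w_proj, scale=1.0):
    """Hypothetical alignment step: inject identity features into latents.

    latent_tokens: list of latent vectors (each of length d_latent)
    id_tokens:     list of encoded identity vectors (each of length d_id)
    w_proj:        d_id x d_latent projection (learned in practice, random here)
    scale:         strength of the identity injection (0 disables it)
    """
    d = len(latent_tokens[0])
    # "Align": map identity features into the latent dimension.
    projected = [linear(t, w_proj) for t in id_tokens]
    out = []
    for q in latent_tokens:
        # Each latent token attends over the projected identity tokens.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in projected]
        weights = softmax(scores)
        attended = [sum(w * v[j] for w, v in zip(weights, projected)) for j in range(d)]
        # Residual connection keeps the original latent content.
        out.append([qj + scale * aj for qj, aj in zip(q, attended)])
    return out


# Toy example with made-up sizes: 2 identity tokens, 3 latent tokens.
rng = random.Random(0)
d_id, d_latent = 8, 4
w_proj = [[rng.gauss(0, 0.1) for _ in range(d_latent)] for _ in range(d_id)]
id_tokens = [[rng.gauss(0, 1) for _ in range(d_id)] for _ in range(2)]
latent_tokens = [[rng.gauss(0, 1) for _ in range(d_latent)] for _ in range(3)]
aligned = align_identity(latent_tokens, id_tokens, w_proj)
```

In this sketch, setting `scale=0.0` returns the latents unchanged, so the injection strength can be varied without touching the rest of the computation. Because only the projection would be trained, a new identity needs just a forward pass through the encoder and this step, which is the sense in which such a design avoids per-subject tuning at inference.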

@inproceedings{liang2024caphuman,
  author={Liang, Chao and Ma, Fan and Zhu, Linchao and Deng, Yingying and Yang, Yi},
  title={CapHuman: Capture Your Moments in Parallel Universes},
  booktitle={CVPR},
  year={2024}
}